(Because of intense interest in this post, we archived it as a technical report at http://graphics.cs.williams.edu/papers/EnvMipReport2013/ suitable for scientific citation)

This post describes a trick used in the G3D Innovation Engine 9.00 to produce reasonable real-time environment lighting for glossy surfaces. It specifically adds two lines of code to a pixel shader to reasonably approximate Lambertian and glossy reflection (under a normalized Phong model) of a standard cube map environment. This trick resulted from an afternoon discussion/challenge last week in the Williams Computational Graphics lab between Morgan McGuire (that's me), my summer 2013 research students, Dan Evangelakos (Williams), James Wilcox (NVIDIA and University of Washington), Sam Donow (Williams), and my NVIDIA colleague and former student Michael Mara.

There are many more sophisticated ways of computing environment lighting for real-time rendering (see Real-Time Rendering's survey or a more informal Google search). Unfortunately, those techniques tend to require an offline asset build step, so they can't be directly applied to dynamic light probes, which are particularly interesting to us...although there are some clever things that one can do with spherical harmonics to reduce the cost of that precomputation a lot. Those methods also tend to require a specific setup for the renderer that doesn't work with our default shaders. For research and hobbyist rendering, one often has to work with off-the-shelf or legacy assets. This situation also arises in production more than one might think, especially when working with games that run across a variety of devices.

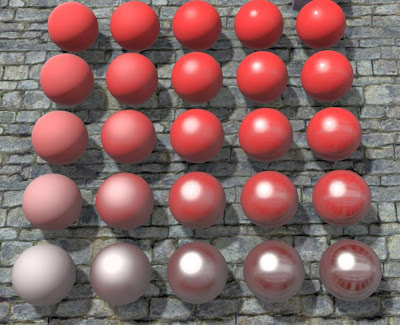

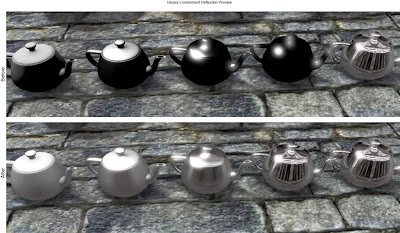

A series of increasingly shiny teapots, before and after applying this technique. In the before row, only the perfect-mirror (rightmost) teapot reflects the environment.

While this is a trick that probably won't interest next-generation console developers or the research community, we're posting it for two reasons. First, it may be useful to a number of mobile game developers, students, and hobbyists, because it is a lot better than having no approximation of environment lighting at all. After all, it improved our images with one hour of math and one minute of coding (about the right ratio in general!), and we can save others the one hour of math. Although, frankly, this trick may also be useful to more "sophisticated" productions and tools; Maya uses simple environment MIP maps for lighting and doesn't even use our approximation to conserve energy or match glossy highlights.

Second, this is a nice example of how back-of-the-envelope computations and a principled understanding of the physics of rendering can very quickly yield practical improvements in image quality. That's the approach that my (other) coauthors and I advocate in Computer Graphics: Principles and Practice, and the one that I teach and use daily in my own work. The difference between a "bad hack" and a "good hack" (or, "principled approximation," if you prefer) is that the good hack is inherently robust and has known sources of errors because it arises from intentional simplification instead of fickle serendipity.

Background

Area lights and strong indirect reflectors (from Digital Camera World)

Harsh CG point lighting (from Computer Graphics: Principles and Practice 3e sample chapter on rasterization and ray tracing)

The simplest technique for lighting a dynamic object is environment lighting. In this case, a cube map or other parameterization of the sphere stores the amount of light arriving from each direction, which is just a picture of the world around the object. Ideally, this cube map would be updated every frame to reflect changes in that lighting environment. However, it works surprisingly well even if the cube map is static, if there is a single cube map used throughout the entire scene despite the view changing, or even if the cube map doesn't look terribly much like the actual scene. The application of maps of indirect light for mirror reflection (environment mapping) was first introduced by Jim Blinn in 1976.

The first use of environment reflection in a feature film: Flight of the Navigator (1985)

The convolution produces a convenient duality that a rough, matte surface under a point light looks

Skyshop's preconvolved environment maps

Key Idea

Our goal is to approximate the convolution of an environment map with a cosine-power lobe parameterized on exponent $s$ using a conventional cube map MIP chain. For Lambertian terms we'll estimate shading with a cube map sample from the lowest-resolution MIP level (approximating cosine to the first power) about the normal, and for glossy terms we'll light with a cube map sample about the reflection vector, taken from a MIP level dictated by the glossy exponent. We just need a formula for the MIP level to sample as a function of the glossy exponent. That's what the rest of this post is about.

Limitations

We ignore the fact that the tangent plane masks the reflection at glancing angles, a common problem even in the good convolution techniques. Since the conventional MIP chain uses simple $2\times 2$ averaging at each level and only within faces, and we then use (seamless) bilinear cube map sampling, our final samples will represent convolution with a pyramid-density distorted quad instead of a power-cosine hemisphere. This is a source of error. It will be minimized near the center of faces and at high exponents (shiny surfaces) and worst at low exponents and corners. If the scene on average is about as bright (the lowest frequencies are nearly constant) in all directions despite lots of high frequency detail (such as in Sponza), then the approximation will work well. If the scene has highly varying illumination as a function of angle (such as in the Cornell Box), then everything will look slightly shinier than it should be because the lowest MIP levels do not actually blur across cube map faces. These approximation errors could be minimized by taking multiple texture samples from the cube map...but if we cared about it that much, we would probably use a better method than sampling MIP maps. So we're going to accept these limitations and ask what is the best we can reasonably do with two texture fetches (Lambertian + glossy) from a conventional MIP map.

Derivation
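Before deriving the MIP level, it may help to see concretely why the coarsest levels of a conventional chain do not blur across faces. The following is a minimal Python sketch, not G3D code: `downsample`, `mip_chain`, and the six constant "Cornell-Box-like" face values are hypothetical names and data chosen for illustration, and each face is a single-channel grid for brevity.

```python
def downsample(face):
    """Halve a square face (list of rows of floats) by 2x2 box averaging."""
    n = len(face) // 2
    return [[(face[2 * y][2 * x] + face[2 * y][2 * x + 1] +
              face[2 * y + 1][2 * x] + face[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(n)] for y in range(n)]


def mip_chain(face):
    """Return [level 0, level 1, ..., 1x1 level] for one power-of-two face."""
    chain = [face]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


# Hypothetical environment in which every face has a different constant radiance.
w = 8
face_radiance = [1.0, 0.0, 0.5, 0.5, 0.25, 0.75]
faces = [[[r] * w for _ in range(w)] for r in face_radiance]

# Each face is filtered independently, so its 1x1 level is just that face's mean.
coarsest = [mip_chain(f)[-1][0][0] for f in faces]
print(coarsest)
```

Because the averaging never crosses a face boundary, the six coarsest texels still differ from one another. A true wide-lobe convolution would pull them all toward the global mean, which is why strongly directional lighting reads as slightly too shiny under this approximation.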

The uniform weighted spherical cap of angle $\phi$ from center to edge subtends a solid angle of

$$2\pi \int_0^{\phi} \sin \theta~d\theta = 2\pi(1 - \cos \phi).$$

This is just the integral of 1 over the cap; the sin term arises from the spherical parameterization in which it is easiest to take the integral. A power-cosine ($\cos^s \theta$) weighted hemisphere, such as the one arising in the Blinn-Phong factor $(\hat{\omega}_\mathrm{h} \cdot \hat{\omega}_\mathrm{i})^s$, subtends a weighted solid angle with measure

$$\Gamma = 2\pi \int_0^{\pi/2} \cos^s \theta \sin \theta ~ d\theta $$

(recall that we're ignoring the tangent plane clipping by not clamping that Blinn-Phong term against zero). Evaluating this integral shows us that in a completely uniform scene, the glossy reflection will have magnitude proportional to the solid angle

$$\Gamma = \frac{2\pi}{s + 1}.$$
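For readers who want the intermediate step, the integral can be worked with the substitution $u = \cos\theta$, $du = -\sin\theta~d\theta$ (the symbol $u$ is introduced here only for this step):

$$\Gamma = 2\pi \int_0^{\pi/2} \cos^s \theta \sin \theta ~ d\theta = 2\pi \int_0^1 u^s ~ du = 2\pi \left[\frac{u^{s+1}}{s+1}\right]_0^1 = \frac{2\pi}{s + 1}$$

As a sanity check, $s = 0$ gives $2\pi$, the solid angle of the whole hemisphere, and $s = 1$ gives $\pi$, the familiar cosine-weighted hemisphere measure.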

A cube map contains six $w \times w$ (where $w$ is "width"; the faces are square) faces and covers the whole sphere of $4\pi$ steradians. MIP level $m$ contains $6 (w 2^{-m})^2$ pixels. A cube map texel at level $m$ thus subtends

$$T = \frac{4\pi}{6 (w ~ 2^{-m})^2} $$

steradians on average, with the ones at the corners covering less and the ones at the centers of faces covering more. We just need to know what MIP-level $m$ approximates integrating the same solid angle as the cosine exponent $s$, so we let $\Gamma = T$ and solve for $m$.
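To see how far individual texels stray from this average, here is a small Python sketch. It assumes a unit cube with faces spanning $[-1, 1]^2$ at distance 1 from the center and uses the standard closed form for the solid angle of an axis-aligned rectangle on such a face; the function names are hypothetical and not part of G3D.

```python
import math


def _area_element(x, y):
    # Antiderivative of 1 / (x^2 + y^2 + 1)^(3/2) in both x and y.
    return math.atan2(x * y, math.sqrt(x * x + y * y + 1.0))


def texel_solid_angle(i, j, n):
    """Exact solid angle (steradians) of texel (i, j) on an n x n cube face."""
    x0, x1 = 2.0 * i / n - 1.0, 2.0 * (i + 1) / n - 1.0
    y0, y1 = 2.0 * j / n - 1.0, 2.0 * (j + 1) / n - 1.0
    return (_area_element(x1, y1) - _area_element(x0, y1)
            - _area_element(x1, y0) + _area_element(x0, y0))


def average_texel_solid_angle(w, m):
    """T = 4*pi / (6 * (w * 2^-m)^2), the average used in the derivation."""
    n = w * 2.0 ** (-m)
    return 4.0 * math.pi / (6.0 * n * n)


w, m = 64, 2
n = w >> m  # face width at MIP level m
avg = average_texel_solid_angle(w, m)
center = texel_solid_angle(n // 2, n // 2, n)
corner = texel_solid_angle(0, 0, n)
face_total = sum(texel_solid_angle(i, j, n) for i in range(n) for j in range(n))
print(corner, avg, center, face_total)
```

The per-face total comes out to one sixth of the sphere, and the corner and center texels bracket the average, which is the variation the derivation deliberately ignores.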

$$\frac{2\pi}{s + 1} =\frac{4\pi}{6(w ~ 2^{-m})^2}$$

$$\frac{2^{2m}}{3w^2} = \frac{1}{s + 1}$$

$$2^{2m} = \frac{3w^2}{s + 1}$$

$$2m = \log\left(\frac{3w^2}{s+1}\right)$$

$$2m = \log(3w^2) + \log\left(\frac{1}{s+1}\right)$$

$$m = \frac{1}{2}\log(3w^2) + \frac{1}{2}\log\left(\frac{1}{s+1}\right)$$

$$m = \log(w\sqrt{3}) + \frac{1}{2}\log \left(\frac{1}{s + 1}\right)$$

$$m = \log(w\sqrt{3}) - \frac{1}{2}\log(s + 1)$$

All logarithms are base 2 in this derivation. The $\log(w\sqrt{3})$ term depends solely on the cube map's MIP 0 resolution and can be precomputed, so we need only evaluate one logarithm and one fused multiply-add instruction per pixel. The logarithm is obviously the more expensive part. The cost of one logarithm in our pixel shader is insignificant compared to the other shading math, but one could store $\log(s + 1)$ in addition to $s$ in the roughness texture map if it were a major concern.
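A few worked values make the formula easier to trust. This is a Python sketch for checking numbers on the CPU rather than shader code; `glossy_mip_level` is a hypothetical name, and the clamp to the valid level range is an assumption added here (texture hardware typically clamps the requested level anyway).

```python
import math


def glossy_mip_level(width, exponent):
    """m = log2(w * sqrt(3)) - 0.5 * log2(s + 1), clamped to [0, log2(w)]."""
    bias = math.log2(width * math.sqrt(3.0))  # depends only on w; precomputable
    m = bias - 0.5 * math.log2(exponent + 1.0)
    # Assumption: clamp to the range of levels that actually exist.
    return min(max(m, 0.0), math.log2(width))


w = 256
for s in (0.0, 2.0, 1023.0, 3.0 * w * w - 1.0):
    print(s, glossy_mip_level(w, s))
```

Two values fall out of the algebra directly: $s = 2$ selects exactly the coarsest level $\log w$ (one texel per face), and $s = 3w^2 - 1$ selects level 0. Exponents below 2 ask for a level coarser than any that exists, which is where the clamp takes over.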

We're now ready for the two lines of code:

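    // MIP level whose average texel solid angle matches the cosine-power lobe
    float MIPlevel =
        log2(environmentMapWidth * sqrt(3.0)) -
        0.5 * log2(glossyExponent + 1.0);

    #if (__VERSION__ >= 400) || defined(GL_ARB_texture_query_lod)
        // Never sample a finer level than the hardware would choose on its own.
        // (textureQueryLod is the GLSL 4.00 name; the ARB extension spells it textureQueryLOD.)
        MIPlevel = max(MIPlevel, textureQueryLod(environmentMap, worldSpaceReflectionVector).y);
    #endif

    // maxMIPLevel is assumed to be supplied by the application as the index of the
    // coarsest (1x1 per face) level, i.e., log2(environmentMapWidth).
    gl_FragColor.rgb =
        lambertianCoefficient * textureCubeLod(environmentMap, worldSpaceNormal, maxMIPLevel).rgb +
        glossyCoefficient * textureCubeLod(environmentMap, worldSpaceReflectionVector, MIPlevel).rgb;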

How one computes the Lambertian and glossy coefficients depends on the preferred shading model. We use the energy-conserving normalized Blinn-Phong with a Fresnel term, as described in Real-Time Rendering and the Graphics Codex. Note that this environment illumination superimposes on the direct illumination, and should produce highlights from light sources in the environment map that are similar to those of direct light sources, as shown in the teapot image at the top of this post. We also use ambient obscurance to modulate the glossy environment reflection for low exponents, since the entire hemisphere should not be integrated in those cases. We're including our full material model and GLSL shader in the version 9.00 release of the G3D Innovation Engine set for release at the end of August.

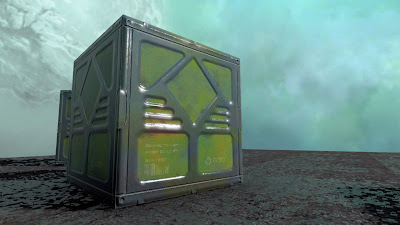

Artifacts from our approximations are least visible on complex, textured geometry, such as this glossy bump-mapped crate reflecting a relatively homogeneous alien planet skybox.

Morgan McGuire is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. He is the author of The Graphics Codex, an essential reference for computer graphics that runs on iPhone, iPad, and iPod Touch.
